Being able to reproduce experiments and results is important to advancing our knowledge, but it’s not something we’ve always been able to do well. In a series of guest posts, we have invited perspectives and advice on reproducibility in NLP; this one is from Antske Fokkens.

Reflections on Improving Replication and Reproduction in Computational Linguistics

(See Ted Pedersen’s Empiricism is not a Matter of Faith for the Sad Tale of the Zigglebottom Tagger)

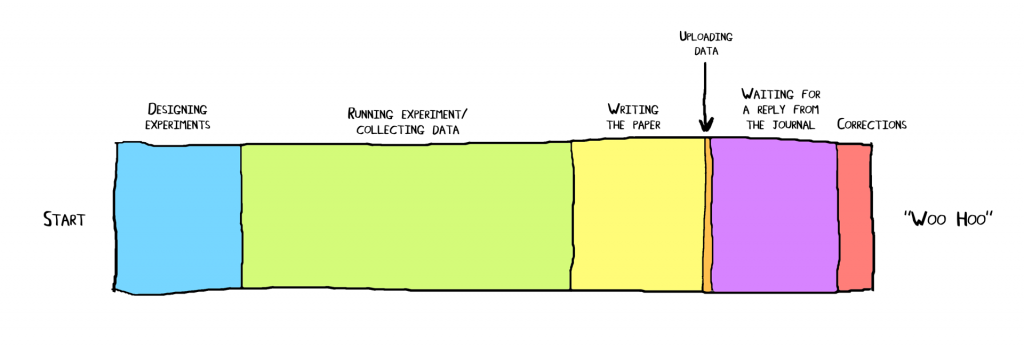

A little over four years ago, we presented our paper Offspring from Reproduction Problems at ACL. The paper discussed two case studies in which we failed to replicate results. While investigating the problem, we found that results differed to an extent that they led to completely different conclusions. The settings, preprocessing and evaluation whose (small) variations led to these changes were not even reported in the original papers.

Though some progress has been made on ensuring both replication (obtaining the same results using the same experiment) and reproduction (reaching the same conclusion through different means), the problem described in 2013 still seems to apply to the majority of the computational linguistics papers published in 2017. In this blog post, I’d like to reflect on the progress that has been made, but also on the progress we still need to make on the level of publishing both replicable and reproducible research. The core issue around replication is the lack of means provided to other researchers to repeat an experiment carried out elsewhere. Issues around reproducing results are more diverse, but I believe that the way we look at evidence and comparison to previous work in our field is a key element of the problem. I will argue that major steps in addressing these issues can be made by (1) increasing appreciation for replicability and reproducibility in published research and (2) changing the way we use the ‘state-of-the-art’ when judging research in our field. More specifically, good papers provide insight into and understanding of a computational linguistics or NLP problem. Reporting results that beat the state-of-the-art is neither sufficient nor necessary for a paper to provide a valuable research contribution.

Replication Problems and Appreciation for Sharing Code

Attention to replicable results (sharing code and resources) has increased in the last four years. Links to git repositories or other version control systems are more and more common, and the review forms of the main conferences include a question addressing the possibilities of replication. Our research group CLTL has adopted a policy indicating that code and resources not restricted by third party licenses must be made available when publishing. When reading related work for my own research, I have noticed similar tendencies in, among others, the UKP-group in Darmstadt, Stanford NLP and the CS and Linguistics departments of the University of Washington. Our PhD students furthermore typically start by replicating or reproducing previous work which they can then use as a baseline. From their experience, I noticed that the problems reported four years ago still apply today. Results were close or comparable sometimes and once even higher, but also regularly far off. Sometimes the provided code did not even run. Authors often provided feedback, but even with their help (sometimes they went as far as looking at our code), the original results could not be replicated. I currently find myself on the other side of the table, with two graduate students wanting to use an analysis from my PhD and the (openly available) code producing errors.

There can be valid reasons for not sharing code or resources. Research teams from industry have often delivered interesting and highly relevant contributions to NLP research and it is difficult to obtain corpora from various genres without copyright on the text. I therefore do not want to argue for less appreciation for research without open source code and resources, but I would very much want to advocate for more appreciation for research that does provide the means for replicating results. In addition to being openly verifiable, it also provides additional means for other researchers to build their work directly upon previous work rather than first going through the frustration of reimplementing a new baseline system good enough to test their hypotheses on.

The General Reproducible and Replicable State-of-the-Art

Comparing performance on benchmark systems has helped in gaining insight into the performance of our systems and in comparing various approaches. Evaluation in our field is often limited to testing whether an approach beats the state-of-the-art. Many even seem to see this as the main purpose to the extent that reviewers rate papers down that don’t beat the state-of-the-art. I suspect that researchers often do not even bother to try and publish their work if performance remains below the best reported. The purpose of evaluation actually is, or should be, to provide insight into how a model works, what phenomena it captures or which patterns the machine learning algorithm picked up, compared to alternative approaches. Moreover, the difficulties involved in replicating results make the practice of judging research on whether it beats the state-of-the-art rather questionable. Reported results may be misleading regarding the actual state-of-the-art. In general, papers should be evaluated based on what they teach us, i.e. whether they verify their hypothesis by comparing it to a suitable baseline. A suitable baseline may indeed mean a baseline that corresponds to the state-of-the-art, but this state-of-the-art should be a valid reflection of what current technologies do.

I would therefore like to introduce the notions of the reproducible state-of-the-art and the generally replicable state-of-the-art. These two notions both aim at gaining better insight into the true state-of-the-art and making building on top of that more accessible to a wider range of researchers. I understand a ‘reproducible state-of-the-art’ to be a result obtained by different groups of researchers independently, which increases the likelihood of providing a reliable result and a baseline that is feasible to reproduce for other researchers. This implies having more appreciation for papers that come relatively close to the state-of-the-art without necessarily beating it. Chances of results being reproducible also increase if they hold across datasets and can be obtained by multiple machine learning runs (e.g. if they are relatively stable across different initializations and different orders of processing the training data by a neural network). The ‘generally replicable state-of-the-art’ refers to the best reported results obtained by a fully available system and, preferably, one that can be trained and run using computational resources available to the average NLP research group. One way to obtain better open source systems and encourage researchers to share their resources and code is by instructing reviewers to appreciate improving the new generally replicable state-of-the-art (with open source code and available resources) as much as improving the reported state-of-the-art.
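
To make the point about stability across runs concrete, here is a minimal sketch in Python of reporting the spread of scores over several random seeds rather than a single best run. The function `train_and_evaluate` is a hypothetical stand-in that only simulates a score; it, the seed list and the numbers it produces are assumptions for illustration, not results from any real system.

```python
import random
import statistics


def train_and_evaluate(seed: int) -> float:
    """Hypothetical stand-in for a real training run.

    It only simulates an F-score that varies with the random seed; replace it
    with code that trains and evaluates your actual model.
    """
    rng = random.Random(seed)
    return 0.80 + rng.uniform(-0.02, 0.02)


def summarize_runs(scores):
    """Summarize scores from repeated runs instead of reporting only the best."""
    return {
        "runs": len(scores),
        "mean": statistics.mean(scores),
        # The sample standard deviation needs at least two runs.
        "stdev": statistics.stdev(scores) if len(scores) > 1 else 0.0,
        "min": min(scores),
        "max": max(scores),
    }


if __name__ == "__main__":
    seeds = [1, 2, 3, 4, 5]  # arbitrary seeds, chosen for illustration
    scores = [train_and_evaluate(seed) for seed in seeds]
    summary = summarize_runs(scores)
    print(
        f"F-score over {summary['runs']} seeds: "
        f"{summary['mean']:.3f} +/- {summary['stdev']:.3f} "
        f"(min {summary['min']:.3f}, max {summary['max']:.3f})"
    )
```

Reporting the mean, the standard deviation and the range side by side gives other groups a realistic target: a replication that lands inside the reported range is a success, even if it does not match the single highest number.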

Understanding Computational Models for Natural Language

In the introduction of this blog, I claimed that improving the state-of-the-art is neither necessary nor sufficient for providing an important contribution to computational linguistics. NLP papers often introduce an idea and show that adding the features or adapting the machine learning approach associated with that idea improves results. Many authors take the improved results as evidence that the idea works, but this is not necessarily the case: improvement can be due to other differences in settings or random variations. The outcome becomes much more convincing if the hypothesis correctly predicts which kind of errors the new approach would solve compared to the baseline. For instance, if you predict that reinforcement learning reduces error propagation, investigate the error propagation in the new system compared to the baseline. Even if it is difficult to predict where improvement comes from, a decent error analysis showing which phenomena are treated better than by other systems, which are handled equally well or badly and which have gotten worse can provide valuable insights into why an approach works or, more importantly, why it does not. This has several advantages: first of all, if we have better insights into what information and which algorithms help for similar and which for different phenomena, we have a better idea of how to further improve our systems (for those among you who are convinced that achieving high f-scores is our ultimate goal). It becomes easier to publish negative results, which in turn promotes progress by preventing other research groups from going down the same pointless road without knowing of each other’s work. We may learn whether an approach works or does not work due to particularities of the data we are working with. Moreover, an understood result is more likely to be a reproducible result and even if it is not, details about what is working exactly may help other researchers to find out why they cannot reproduce it.
In my opinion, this is where our field fails most: we are too easily satisfied when results are high and do not aim for deep insight frequently enough. This aspect may be the hardest to tackle of the points I have raised in this post. On the upside, addressing this is not made impossible by licenses, copyright and commercial code.
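
As an illustration of the kind of error analysis described in this section, the sketch below counts, per phenomenon, which items a new system fixes, which it breaks, and which both systems get right or wrong. The function name, the outcome labels and the toy part-of-speech data are all invented for this example; how instances are assigned to phenomena is an assumption that would depend on the task.

```python
from collections import Counter, defaultdict


def compare_by_phenomenon(items):
    """Count, per phenomenon, how the new system's errors differ from the baseline's.

    Each item is a tuple (phenomenon, gold, baseline_prediction, new_prediction).
    """
    table = defaultdict(Counter)
    for phenomenon, gold, baseline, new in items:
        baseline_ok = baseline == gold
        new_ok = new == gold
        if new_ok and not baseline_ok:
            outcome = "fixed"         # new system repairs a baseline error
        elif baseline_ok and not new_ok:
            outcome = "broken"        # new system introduces an error
        elif new_ok:
            outcome = "both_correct"
        else:
            outcome = "both_wrong"
        table[phenomenon][outcome] += 1
    return {phenomenon: dict(counts) for phenomenon, counts in table.items()}


if __name__ == "__main__":
    # Toy data, invented for illustration only.
    toy_items = [
        ("unknown_word", "NOUN", "VERB", "NOUN"),
        ("unknown_word", "NOUN", "NOUN", "NOUN"),
        ("ambiguous_word", "VERB", "VERB", "NOUN"),
        ("ambiguous_word", "ADJ", "NOUN", "VERB"),
    ]
    for phenomenon, counts in compare_by_phenomenon(toy_items).items():
        print(phenomenon, counts)
```

Two systems with identical overall scores can produce very different tables here, and it is the per-phenomenon breakdown, not the aggregate, that shows whether the hypothesis behind the new approach actually holds.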

Moving Forward

As a community, we are responsible for improving the quality of our research. Most of the effort will probably have to come from the bottom up: individual researchers can decide to write (only) papers that have a solid methodological setup, that aim for insights in addition to, or even rather than, high f-scores, and that provide code and resources whenever allowed. They can also decide to value papers that follow such practices more and be (more) critical of papers that do not provide insights or good understanding of the methods. Initiatives such as the workshops Analyzing and Interpreting Neural Networks for NLP, Building and Breaking, Ethics in NLP, Relevance of Linguistic Structure in Neural NLP (and many others) show that the desire to obtain better understanding is very much alive in the community.

Researchers serving as program chairs can play a significant role in further encouraging authors and reviewers. The categories of best papers proposed for COLING2018 are a nice example of an incentive that appreciates a variety of contributions to the field. The main conferences’ review forms have included questions about resources provided by the paper. Last year, however, the option ‘no code or resources provided’ was followed by ‘(most submissions)’. As a reviewer, I wondered: why this addition? We should at least try to move towards a situation in which providing code and resources is normal or maybe even standard. The new NAACL form refers to the encouragement of sharing research for papers introducing new systems. I hope this will also be included for other paper categories and that the chairs will connect this encouragement to a reward for authors who do share. I also hope chairs and editors, for all conferences, journals and workshops, will remind their reviewers of the fragility of reported results and ask them to take this into consideration when verifying whether empirical results are sufficient compared to related work. Most of all, I hope many researchers will feel encouraged to submit insightful research with low as well as high results and I hope to learn much from it.

Thank you for reading. Please share your ideas and thoughts: I’d specifically love to hear from researchers who have different opinions.

Antske Fokkens

https://twitter.com/antske

Acknowledgements

I’d like to thank Leon Derczynski for inviting me to write this post. Thanks to Ted Pedersen (who I have never met in person) for that crazy Saturday we spent hacking across the ocean to finally find out why the original results could not be replicated. I’d like to thank Emily Bender for valuable feedback. Last but not least, thanks to the members of the CLTL research group for discussions and inspiration on this topic as well as the many many colleagues from all over the world I have exchanged thoughts with on this topic over the past four years!